DeepTrace Documentation

Welcome to the comprehensive documentation for DeepTrace - a cutting-edge, non-intrusive distributed tracing framework designed specifically for microservices architectures.

What is DeepTrace?

DeepTrace is a revolutionary distributed tracing framework that enables accurate end-to-end observation of request execution paths in microservices environments without requiring any code instrumentation. By leveraging advanced eBPF technology and intelligent transaction semantics, DeepTrace achieves over 95% tracing accuracy even under high concurrency scenarios.

Key Innovations

🚀 Non-Intrusive Design

No code changes required - DeepTrace works out of the box with your existing applications

🔍 Protocol-Aware Intelligence

Supports 20+ application protocols (HTTP, gRPC, Redis, MongoDB, etc.) with intelligent parsing

🧠 Transaction-Based Correlation

Uses dual-phase transaction inference with API affinity and persistent field similarity

⚡ High Performance

94% reduction in transmission overhead compared to traditional tracing frameworks

Architecture Overview

DeepTrace consists of two main components:

- Agent: Deployed on each host, responsible for non-intrusive request collection and span correlation through eBPF

- Server: Runs in Kubernetes clusters, handles trace assembly from correlated spans and provides query services

graph TB

subgraph "Host 1"

A1[Application 1]

A2[Application 2]

AG1[DeepTrace Agent]

A1 --> AG1

A2 --> AG1

end

subgraph "Host 2"

A3[Application 3]

A4[Application 4]

AG2[DeepTrace Agent]

A3 --> AG2

A4 --> AG2

end

subgraph "DeepTrace Server Cluster"

S[DeepTrace Server]

DB[(Elasticsearch)]

WEB[Web Interface]

DB --> S

S --> WEB

end

AG1 --> DB

AG2 --> DB

Core Features

1. Protocol-Aware Span Construction

- eBPF-based packet capture for non-intrusive monitoring

- Protocol templates for accurate parsing of 20+ protocols

- Smart request boundary detection using length-field jumps and full parsing

- Efficient span creation with critical metadata extraction

2. Transaction-Based Span Correlation

- Nested API affinity: Traffic intensity correlations using Pearson coefficients

- Persistent field similarity: TF-IDF-weighted cosine similarity for transaction field isolation

- Entropy-weighted adaptive scoring: Intelligent fusion of transaction semantics and causality metrics

- 15% reduction in misattributions compared to traditional delay/FIFO methods

3. Query-Driven Trace Assembly

- On-host compression and dual-indexing for minimal overhead

- Iterative trace reconstruction based on operator queries

- Tag-based inverted indexes and metric histograms

- 94% reduction in transmission overhead while maintaining query flexibility

Quick Navigation

🚀 Getting Started

New to DeepTrace? Start here:

- Quick Start Guide - Get up and running in 10 minutes

- Installation - Detailed installation instructions

- All-in-One Deployment - Single-host setup for testing

📖 User Guide

Learn how to use DeepTrace effectively:

- Basic Usage - Essential operations and workflows

- Deployment Modes - Choose the right deployment strategy

- Trace Analysis - Analyze and interpret traces

🏗️ Architecture & Implementation

Understand how DeepTrace works:

- System Overview - High-level architecture

- eBPF Implementation - Deep dive into eBPF components

- Advanced Topics - Advanced features and algorithms

🔧 Development & Testing

For developers and contributors:

- Testing Guide - Comprehensive testing strategies

- API Reference - Complete API documentation

- Troubleshooting - Common issues and solutions

Supported Environments

DeepTrace has been tested and verified on:

- Operating System: Ubuntu 24.04 LTS

- Kernel Version: 6.8.0-55-generic or later

- Container Runtime: Docker v26.1.3+

- Orchestration: Kubernetes 1.20+

Community & Support

- GitHub Repository: DeepShield-AI/DeepTrace

- Issues & Bug Reports: GitHub Issues

- Discussions: GitHub Discussions

License

DeepTrace is released under the MIT License.

Quick Start Guide

Get DeepTrace up and running in just 10 minutes! This guide will walk you through the fastest way to deploy DeepTrace and start collecting traces from your applications.

Prerequisites

Before you begin, ensure you have:

- Ubuntu 24.04 LTS (or compatible Linux distribution)

- Kernel version 4.7.0+ with eBPF support

- Docker 26.1.3+ installed and running

- 40GB+ free disk space

- Root/sudo access

- Internet connectivity

Step 1: Clone the Repository

git clone https://github.com/DeepShield-AI/DeepTrace.git

cd DeepTrace

⚠️ Important: Do not clone into

/etcdirectory as the agent will use this path later.

Step 2: Quick Configuration

- To deploy DeepTrace, you must fill in the following fields in the

server/config/config.tomlin order to run it. These required fields are presented in the configuration file in the format of xxx. In all-in-one mode, theserver.ipandagents.agent_info.host_ipvalues are identical.

Edit the configuration file and fill in these required fields:

| Configuration Item | Description |

|---|---|

server.ip | The external IP address of the host running the DeepTrace server and the Elastic database |

elastic.elastic_password | Password for Elastic |

agents.agent_info.agent_name | Name of the agent, which uniquely identifies each agent instance |

agents.agent_info.user_name | The username for logging into the host where the agent is located via SSH |

agents.agent_info.host_ip | IP address of the agent host |

agents.agent_info.ssh_port | SSH port of the agent host (usually 22) |

agents.agent_info.host_password | The password for logging into the host where the agent is located via SSH |

Step 3: Deploy DeepTrace Server

Launch the DeepTrace server and Elasticsearch database:

sudo bash scripts/deploy_server.sh

This command will:

- Pull required Docker images

- Start Elasticsearch database

- Launch DeepTrace server

- Set up the web interface

Verify deployment:

sudo docker ps | grep deeptrace

You should see containers running for deeptrace_server and elasticsearch.

Step 4: Access Elasticsearch Web Interface

Open your browser and navigate to:

http://YOUR_SERVER_IP:5601

Login credentials:

- Username:

elastic - Password:

YOUR_ELASTIC_PASSWORD(from Step 2)

Step 5: Deploy a Microservice Application

To generate traces, deploy a test microservice application:

Choose an application: See Workload Applications for detailed deployment instructions.

Step 6: Install and Start Agent

Install the DeepTrace agent on your host:

# Install agent (compiles from source)

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent install

# Start collecting traces

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent run

The agent will automatically:

- Compile eBPF programs

- Start monitoring all Docker containers

- Begin collecting network traces

- Send data to the server

Step 7: Generate Sample Traffic

Generate traffic to your deployed microservice application:

Follow the traffic generation instructions in Workload Applications for your chosen application.

Step 8: Build and View Traces

Correlate spans and assemble traces:

# Perform span correlation using DeepTrace algorithm

sudo docker exec -it deeptrace_server python -m cli.src.cmd asso algo deeptrace

# Assemble traces from correlated spans

sudo docker exec -it deeptrace_server python -m cli.src.cmd assemble

Step 9: Explore Your Traces

- Elasticsearch Web Interface: Visit

http://YOUR_SERVER_IP:5601 - Navigate to Discover: Click on "Discover" in the left sidebar

- Select Index: Choose the trace index pattern

- View Traces: Explore collected traces with rich metadata

Verification Checklist

✅ Server Running: sudo docker ps | grep deeptrace_server

✅ Agent Connected: Check agent status in web interface

✅ Traces Collected: Verify traces appear in Elasticsearch

✅ Elasticsearch Web Interface Accessible: Can login and view data

Clean Up

To remove DeepTrace and all components:

sudo bash scripts/clear.sh

This will stop and remove all containers, networks, and temporary files.

Next Steps

Congratulations! You now have DeepTrace running and collecting traces. Here's what to explore next:

- Configuration Guide: Customize DeepTrace for your environment

- Basic Usage: Learn essential operations

- Architecture Overview: Understand how DeepTrace works

- Troubleshooting: Resolve common issues

Need Help?

- Common Issues: Check our troubleshooting guide

- GitHub Issues: Report bugs or ask questions

- Documentation: Explore the full documentation

Installation Guide

This guide provides comprehensive installation instructions for DeepTrace. Choose the installation method that best fits your environment and requirements.

Installation Methods

DeepTrace can be installed using two primary methods:

-

Docker Installation (Recommended)

- Fastest and most reliable method

- Pre-built environment with all dependencies

- Ideal for production deployments

-

- Build from source code

- Full control over compilation process

- Required for custom modifications

System Requirements

Minimum Requirements

| Component | Requirement |

|---|---|

| Operating System | Ubuntu 24.04 LTS (or compatible) |

| Kernel Version | 4.7.0+ with eBPF support |

| Memory | 8GB recommended |

| Storage | 40GB free disk space |

| CPU | 2 cores minimum, 4+ recommended |

| Network | Internet connectivity for downloads |

Software Dependencies

- Docker: v26.1.3 or later

- Container Runtime: Docker Engine or compatible

- Shell: Bash 4.0+

- Privileges: Root or sudo access

Kernel Requirements

DeepTrace requires specific kernel features:

# Check kernel version

uname -r

# Verify eBPF support

zgrep CONFIG_BPF /proc/config.gz

zgrep CONFIG_BPF_SYSCALL /proc/config.gz

zgrep CONFIG_BPF_JIT /proc/config.gz

All should return =y or =m.

Pre-Installation Checklist

Before installing DeepTrace, verify your system meets all requirements:

1. System Compatibility

# Check OS version

lsb_release -a

# Check available disk space

df -h

# Check memory

free -h

# Verify Docker installation

sudo docker --version

2. Network Configuration

# Test internet connectivity

ping -c 3 github.com

# Check if required ports are available

netstat -tuln | grep -E ':(5601|7901|9200|52001)'

3. Permissions

# Verify sudo access

sudo whoami

# Check Docker permissions

sudo docker ps

Installation Overview

The installation process involves several key steps:

- Environment Setup: Prepare the host system

- Repository Clone: Download DeepTrace source code

- Configuration: Set up configuration files

- Server Deployment: Install server components

- Agent Installation: Deploy monitoring agents

- Verification: Confirm successful installation

Quick Installation

For users who want to get started immediately:

# Clone repository

git clone https://github.com/DeepShield-AI/DeepTrace.git

cd DeepTrace

# Quick setup with Docker (recommended)

sudo bash scripts/install_agent.sh

This script will:

- Pull necessary Docker images

- Set up basic configuration

- Deploy agent component

Deployment Modes

DeepTrace supports multiple deployment configurations:

Single Host (All-in-One)

- Server and agent on the same machine

- Ideal for testing and small deployments

- Simplified configuration and management

Distributed Deployment

- Server cluster with multiple agents

- Production-ready scalability

- Advanced configuration options

Post-Installation

After successful installation:

- Verify Services: Ensure all components are running

- Access Elasticsearch Web Interface: Connect to the management dashboard

- Test Functionality: Generate sample traces

Troubleshooting Installation

Common installation issues and solutions:

Docker Issues

# Fix Docker permissions

sudo usermod -aG docker $USER

newgrp docker

# Restart Docker service

sudo systemctl restart docker

Port Conflicts

# Check port usage

sudo netstat -tuln | grep :PORT_NUMBER

# Kill conflicting processes

sudo fuser -k PORT_NUMBER/tcp

Insufficient Resources

# Check system resources

htop

df -h

free -h

# Clean up disk space

docker system prune -a

Next Steps

After installation, proceed to:

- Configuration Guide: Customize your deployment

- Quick Start: Begin collecting traces

- Basic Usage: Learn essential operations

Support

If you encounter issues during installation:

- Check Prerequisites: Verify all requirements are met

- Review Logs: Examine installation logs for errors

- Consult Documentation: Check specific installation method guides

- Community Support: Visit our GitHub Issues

Docker Installation

The Docker installation method is the recommended approach for deploying DeepTrace. It provides a pre-configured environment with all dependencies, ensuring consistent and reliable deployments across different systems.

Prerequisites

System Requirements

- Ubuntu 24.04 LTS (or compatible Linux distribution)

- Kernel 4.7.0+ with eBPF support

- 40GB+ free disk space

- 8GB+ RAM

- Internet connectivity

Docker Installation

If Docker is not already installed, you can install Docker by following the official instructions: Docker Installation

1. Verify Docker Installation

# Check Docker version

sudo docker --version

# Test Docker installation

sudo docker run hello-world

DeepTrace Docker Installation

Step 1: Clone Repository

git clone https://github.com/DeepShield-AI/DeepTrace.git

cd DeepTrace

⚠️ Important: Do not clone into

/etcdirectory as the agent will use this path during deployment.

Step 2: Configure Docker Registry

DeepTrace uses a private Docker registry for pre-built images. Configure Docker to access it:

Edit Docker Daemon Configuration

sudo nano /etc/docker/daemon.json

Add the following configuration:

{

"insecure-registries": ["47.97.67.233:5000"]

}

Note: This configuration allows HTTP connections to the private registry.

Restart Docker Service

sudo systemctl daemon-reload

sudo systemctl restart docker

Step 3: Pull DeepTrace Images

# Pull the main DeepTrace image

sudo docker pull 47.97.67.233:5000/deepshield/deeptrace:latest

# Verify image download

sudo docker images | grep deeptrace

Step 4: Compile Agent

Use the Docker container to compile the DeepTrace agent:

# Navigate to DeepTrace directory

cd DeepTrace

# Compile using Docker container

sudo docker run --privileged --rm -it \

-v $(pwd):/DeepTrace \

47.97.67.233:5000/deepshield/deeptrace:latest \

bash -c 'cd /DeepTrace/agent && cargo xtask build --profile release'

This command will:

- Mount your local DeepTrace directory into the container

- Compile the agent with release optimizations

- Generate the binary at

agent/target/x86_64-unknown-linux-gnu/release/deeptrace

Step 5: Configure DeepTrace

# Copy example configuration

cd agent

cp config/deeptrace.toml.example config/deeptrace.toml

# Edit configuration file

nano config/deeptrace.toml

Update the configuration with your specific settings. See the Configuration Guide for detailed options.

Step 6: Test Agent

# Test the compiled agent

sudo RUST_LOG=info ./target/x86_64-unknown-linux-gnu/release/deeptrace -c config/deeptrace.toml

Verification

1. Verify Agent Compilation

# Check if agent binary exists

ls -la target/x86_64-unknown-linux-gnu/release/deeptrace

# Test agent help

./target/x86_64-unknown-linux-gnu/release/deeptrace --help

Troubleshooting

Common Docker Issues

Permission Denied

# Add user to docker group

sudo usermod -aG docker $USER

newgrp docker

Port Already in Use

# Check what's using the port

sudo netstat -tuln | grep :5601

# Kill the process

sudo fuser -k 5601/tcp

Image Pull Failures

# Check Docker daemon configuration

sudo systemctl status docker

# Restart Docker

sudo systemctl restart docker

# Try pulling again

docker pull 47.97.67.233:5000/deepshield/deeptrace:latest

Compilation Errors

# Check available disk space

df -h

# Clean Docker cache

docker system prune -a

# Retry compilation with verbose output

sudo docker run --privileged --rm -it \

-v $(pwd):/DeepTrace \

47.97.67.233:5000/deepshield/deeptrace:latest \

bash -c 'cd /DeepTrace/agent && RUST_LOG=debug cargo xtask build --profile release'

Resource Issues

Insufficient Memory

# Check memory usage

free -h

# Increase Docker memory limit (if using Docker Desktop)

# Go to Docker Desktop Settings > Resources > Memory

Disk Space

# Clean up Docker resources

sudo docker system prune -a --volumes

# Remove unused images

sudo docker image prune -a

Next Steps

After successful Docker installation:

- Configuration: Customize your deployment

- All-in-One Deployment: Quick setup for testing

- Basic Usage: Start using DeepTrace

Alternative: Manual Compilation

If you prefer to compile from source without Docker, see the Manual Compilation Guide.

Manual Compilation

This guide walks you through compiling DeepTrace from source code. Manual compilation gives you full control over the build process and is required for custom modifications or when Docker is not available.

When to Use Manual Compilation

Choose manual compilation when you need to:

- Customize the build process or modify source code

- Work in environments where Docker is not available

- Understand the dependencies and build process in detail

- Optimize for specific hardware or kernel configurations

💡 Tip: For most users, the Docker installation method is faster and more reliable.

Prerequisites

System Requirements

- Ubuntu 24.04 LTS (recommended) or compatible Linux distribution

- Kernel 4.7.0+ with eBPF support and BTF information

- 20GB+ free disk space

- 8GB+ RAM

- Internet connectivity for downloading dependencies

Required Packages

The following packages must be installed before compilation:

# Update package lists

sudo apt-get update

# Install essential build tools

sudo apt-get install -y --no-install-suggests --no-install-recommends \

build-essential \

clang \

llvm-18 \

llvm-18-dev \

llvm-18-tools \

curl \

ca-certificates \

git \

make \

libelf-dev \

libclang-18-dev \

pkg-config \

libssl-dev \

openssl

Step-by-Step Installation

Step 1: Set Up Environment Variables

Configure LLVM environment variables for the build process:

# Set LLVM paths

export LLVM_PATH=/lib/llvm-18

export PATH=$PATH:/lib/llvm-18/bin

# Make changes persistent

echo "export LLVM_PATH=/lib/llvm-18" >> ~/.bashrc

echo "export PATH=\$PATH:/lib/llvm-18/bin" >> ~/.bashrc

source ~/.bashrc

# Verify LLVM installation

llvm-config-18 --version

clang-18 --version

Step 2: Build and Install libbpf

DeepTrace requires libbpf for eBPF functionality:

# Clone libbpf repository

git clone https://github.com/libbpf/libbpf.git --branch libbpf-1.6.2 --depth 1

cd libbpf/src

# Build with static linking only

BUILD_STATIC_ONLY=y make -j$(nproc)

# Install system-wide

sudo make install

# Update library cache

sudo ldconfig

# Verify installation

pkg-config --modversion libbpf

Step 3: Install Rust Toolchain

DeepTrace is written in Rust and requires specific toolchain components:

# Install Rust using rustup

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -s -- -y --default-toolchain=stable

# Add Rust to PATH

echo "export PATH=\$PATH:\$HOME/.cargo/bin" >> ~/.bashrc

source ~/.bashrc

# Verify Rust installation

rustc --version

cargo --version

Step 4: Configure Rust for eBPF Development

Install additional Rust components needed for eBPF compilation:

# Add Rust source code (required for eBPF)

rustup component add rust-src

# Install nightly toolchain with rust-src

rustup toolchain install nightly --component rust-src

# Add target for cross-compilation (if needed)

rustup target add aarch64-unknown-linux-gnu

# Install BPF linker

cargo install bpf-linker

# Verify BPF linker installation

bpf-linker --version

Step 5: Clone DeepTrace Repository

# Clone the repository

git clone https://github.com/DeepShield-AI/DeepTrace.git

cd DeepTrace

# Check repository structure

ls -la

Step 6: Compile DeepTrace

Now compile the DeepTrace agent with optimizations:

cd agent

# Compile with release profile for optimal performance

cargo xtask build --profile release

# The compilation process will:

# 1. Build eBPF programs

# 2. Compile Rust userspace components

# 3. Link everything together

Expected compilation time: 10-30 minutes depending on your hardware.

Step 7: Verify Compilation

Check that the compilation was successful:

# Verify agent binary exists

ls -la target/x86_64-unknown-linux-gnu/release/deeptrace

# Check binary size and permissions

file target/x86_64-unknown-linux-gnu/release/deeptrace

# Test help output

./target/x86_64-unknown-linux-gnu/release/deeptrace --help

Step 8: Set Up Configuration

# Copy example configuration

cp config/deeptrace.toml.example config/deeptrace.toml

# Edit configuration as needed

nano config/deeptrace.toml

Step 9: Test the Agent

Run a basic test to ensure the agent works correctly:

# Test with info logging

RUST_LOG=info cargo xtask run -c config/deeptrace.toml

# Or run the binary directly

sudo RUST_LOG=info ./target/x86_64-unknown-linux-gnu/release/deeptrace -c config/deeptrace.toml

Advanced Build Options

Debug Build

For development and debugging:

# Build with debug symbols

cargo xtask build --profile debug

# Run with debug logging

RUST_LOG=debug cargo xtask run -c config/deeptrace.toml

Custom Features

Enable or disable specific features:

# Build with specific features

# todo: feature is currently not supported

cargo xtask build --profile release --features "feature1,feature2"

# Build without default features

cargo xtask build --profile release --no-default-features

Cross-Compilation

For different architectures:

# Add target architecture

rustup target add aarch64-unknown-linux-gnu

# Cross-compile for ARM64

cargo xtask build --profile release --target aarch64-unknown-linux-gnu

Troubleshooting Compilation Issues

Common Build Errors

LLVM/Clang Issues

# Verify LLVM installation

which clang-18

llvm-config-18 --version

# Reinstall if necessary

sudo apt-get install --reinstall llvm-18 clang-18

libbpf Linking Errors

# Check libbpf installation

pkg-config --libs libbpf

# Rebuild libbpf if necessary

cd libbpf/src

make clean

BUILD_STATIC_ONLY=y make -j$(nproc)

sudo make install

sudo ldconfig

Rust Compilation Errors

# Update Rust toolchain

rustup update

# Clean build cache

cargo clean

# Rebuild with verbose output

cargo xtask build --profile release -- --verbose

eBPF Compilation Errors

# Check kernel headers

ls /usr/src/linux-headers-$(uname -r)/

# Install kernel headers if missing

sudo apt-get install linux-headers-$(uname -r)

# Verify BTF support

ls /sys/kernel/btf/vmlinux

Memory Issues During Compilation

If compilation fails due to insufficient memory:

# Check available memory

free -h

# Reduce parallel jobs

cargo xtask build --profile release -j 1

# Or increase swap space

sudo fallocate -l 4G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

Disk Space Issues

# Check available space

df -h

# Clean Rust cache

cargo clean

# Remove target directory

rm -rf target/

# Clean package cache

sudo apt-get clean

Development Setup

For ongoing development work:

# Install development tools

cargo install cargo-watch cargo-expand

# Set up pre-commit hooks

git config core.hooksPath .githooks

# Run tests

cargo test

# Format code

cargo +nightly fmt

# Run linter

cargo xtask clippy

Next Steps

After successful manual compilation:

- Configuration Guide: Set up your deployment

- Testing Guide: Verify your build

- Development Setup: Set up for development

References

- Ubuntu Installation Guide

- LLVM Documentation

- libbpf GitHub Repository

- Rust Installation Guide

- Aya eBPF Framework

- BPF Linker Documentation

Configuration Guide

This comprehensive guide covers all aspects of configuring DeepTrace for your specific environment and requirements. DeepTrace consists of two main components that require separate configuration: the Server and the Agent.

Configuration Overview

DeepTrace uses TOML configuration files to manage settings. The configuration system is designed to be:

- Simple: Straightforward configuration structure

- Flexible: Support for multiple deployment scenarios

- Secure: Sensitive information can be externalized

- Validated: Configuration is checked at startup

Configuration Files

DeepTrace provides several configuration files:

- Server:

server/config/config.toml- Server and agent management configuration - Agent:

agent/config/deeptrace.toml- Agent-side configuration (current) - Agent Template:

agent/config/deeptrace.toml.example- Agent configuration template - Prism:

agent/config/prism.toml- Lightweight monitoring configuration

Server Configuration

The server configuration manages the DeepTrace server, Elasticsearch integration, and agent deployment settings. The main configuration file is located at server/config/config.toml.

Required Server Configuration

The server configuration is simple and requires only essential fields:

Server Settings

[server]

# External IP address of the DeepTrace server (REQUIRED)

ip = "192.168.1.100" # Replace with your server's IP

Elasticsearch Configuration

[elastic]

# Elasticsearch password (REQUIRED - choose a secure password)

elastic_password = "your_secure_password_here"

Agent Management Configuration

The server manages agent deployments through SSH connections:

Single Agent Configuration

[[agents]]

[agents.agent_info]

# Unique identifier for this agent (REQUIRED)

agent_name = "agent-1"

# SSH connection details (ALL REQUIRED)

user_name = "ubuntu" # SSH username

host_ip = "192.168.1.101" # Agent host IP

ssh_port = 22 # SSH port (usually 22)

host_password = "ssh_password" # SSH password (consider using SSH keys)

Multiple Agents Configuration

# Agent 1 - Web servers

[[agents]]

[agents.agent_info]

agent_name = "agent-1"

user_name = "ubuntu"

host_ip = "192.168.1.101"

ssh_port = 22

host_password = "password1"

# Agent 2 - Database servers

[[agents]]

[agents.agent_info]

agent_name = "agent-2"

user_name = "ubuntu"

host_ip = "192.168.1.102"

ssh_port = 22

host_password = "password2"

# Agent 3 - Cache servers

[[agents]]

[agents.agent_info]

agent_name = "agent-3"

user_name = "ubuntu"

host_ip = "192.168.1.103"

ssh_port = 22

host_password = "password3"

Agent Configuration

The agent configuration defines how the DeepTrace agent operates on target systems. The main configuration file is agent/config/deeptrace.toml.

Required Agent Configuration

Basic Agent Settings

[agent]

name = "deeptrace" # Agent identifier (required)

Configuration Modules

Metric Collection Configuration

[metric]

interval = 10 # Metric collection interval (seconds)

sender = "metric" # Sender configuration name for metrics

Data Sending Configuration

File-based Storage for Metrics

[sender.file.metric]

path = "metrics.csv" # File path for metrics storage

rotate = true # Enable file rotation

max_size = 512 # Maximum file size (MB)

max_age = 7 # Maximum retention (days)

rotate_time = 10 # Rotation interval (days)

data_format = "%Y%m%d" # Timestamp format for rotation

Elasticsearch Sender for Traces

[sender.elastic.trace]

node_urls = "http://localhost:9200" # Elasticsearch URL

username = "elastic" # Elasticsearch username

password = "your_password" # Elasticsearch password

request_timeout = 10 # Request timeout (seconds)

index_name = "agent1" # Index name for this agent

bulk_size = 32 # Bulk operation size

Tracing Configuration

[trace]

ebpf = "trace" # eBPF configuration name for tracing

sender = "trace" # Sender configuration name for traces

[trace.span]

cleanup_interval = 30 # Cleanup interval for expired spans (seconds)

max_sockets = 1024 # Maximum tracked socket count

eBPF Configuration

[ebpf.trace]

log_level = 1 # Log level: 0=off, 1=debug, 3=verbose, 4=stats

pids = [523094] # Process IDs to monitor (specific PIDs)

max_buffered_events = 128 # Maximum events processed per batch

enabled_probes = [ # List of enabled system call probes

"sys_enter_read",

"sys_exit_read",

"sys_enter_readv",

"sys_exit_readv",

"sys_enter_recvfrom",

"sys_exit_recvfrom",

"sys_enter_recvmsg",

"sys_exit_recvmsg",

"sys_enter_recvmmsg",

"sys_exit_recvmmsg",

"sys_enter_write",

"sys_exit_write",

"sys_enter_writev",

"sys_exit_writev",

"sys_enter_sendto",

"sys_exit_sendto",

"sys_enter_sendmsg",

"sys_exit_sendmsg",

"sys_enter_sendmmsg",

"sys_exit_sendmmsg",

"sys_exit_socket",

"sys_enter_close"

]

Complete Configuration Examples

Full-Featured Agent Configuration

[agent]

name = "production-agent"

[metric]

interval = 5

sender = "metric"

[sender.file.metric]

path = "/var/log/deeptrace/metrics.csv"

rotate = true

max_size = 256

max_age = 30

rotate_time = 7

data_format = "%Y%m%d"

[sender.elastic.trace]

node_urls = "http://prod-elastic:9200"

username = "elastic"

password = "prod_password"

request_timeout = 30

index_name = "production_traces"

bulk_size = 64

[trace]

ebpf = "trace"

sender = "trace"

[trace.span]

cleanup_interval = 30

max_sockets = 10000

[ebpf.trace]

log_level = 1

enabled_probes = [

"sys_enter_read",

"sys_exit_read",

"sys_enter_recvfrom",

"sys_exit_recvfrom",

"sys_enter_write",

"sys_exit_write",

"sys_enter_sendto",

"sys_exit_sendto",

"sys_exit_socket",

"sys_enter_close"

]

max_buffered_events = 256

pids = [] # Monitor no processes

Troubleshooting Configuration

Common Server Issues

Configuration File Not Found

# Check file exists and permissions

ls -la server/config/config.toml

chmod 644 server/config/config.toml

Invalid TOML Syntax

# Validate TOML syntax

python3 -c "import toml; toml.load('server/config/config.toml')"

Agent Connection Issues

# Test SSH connectivity to agent

ssh ubuntu@192.168.1.101 -p 22

# Test DeepTrace server port

telnet 192.168.1.100 7901

Common Agent Issues

Configuration Loading Errors

# Check agent configuration syntax

cd agent/config

python3 -c "import toml; toml.load('deeptrace.toml')"

Network Connectivity

# Test server connectivity

telnet 192.168.1.100 7901

# Test Elasticsearch connectivity

curl http://192.168.1.100:9200/_cluster/health

Permission Issues

# Check eBPF capabilities

sudo setcap cap_sys_admin,cap_net_admin,cap_bpf+ep /path/to/deeptrace

# Check file permissions

ls -la agent/config/deeptrace.toml

chmod 644 agent/config/deeptrace.toml

Next Steps

After configuring DeepTrace:

- All-in-One Deployment: Deploy for testing

- Basic Usage: Start using DeepTrace

- Troubleshooting: Resolve issues

All-in-One Deployment

All-in-one deployment runs both the DeepTrace server and agent on a single host. This is the recommended starting point for new users.

🚀 Ready to start? Follow the Quick Start Guide for complete step-by-step instructions.

What is All-in-One Mode?

In all-in-one deployment, all DeepTrace components run on the same host:

┌─────────────────────────────────────────────────┐

│ Single Host (All-in-One) │

│ │

│ ┌──────────────┐ ┌──────────────┐ │

│ │ DeepTrace │◄────►│ Elasticsearch│ │

│ │ Server │ │ Database │ │

│ └──────────────┘ └──────────────┘ │

│ ▲ │

│ │ │

│ ▼ │

│ ┌──────────────┐ ┌──────────────┐ │

│ │ DeepTrace │◄────►│ Microservice │ │

│ │ Agent │ │ Apps │ │

│ └──────────────┘ └──────────────┘ │

│ │

└─────────────────────────────────────────────────┘

Key Benefits

- Simple Setup: Single command deployment

- Quick Learning: Understand all components on one host

- Easy Testing: Perfect for evaluation and development

- Minimal Resources: Requires only one host

Requirements

- OS: Ubuntu 24.04 LTS

- Memory: 8GB RAM minimum

- Storage: 40GB free space

- Docker: v26.1.3+

Key Configuration Note

In all-in-one mode, the server and agent IPs must be identical:

[server]

ip = "192.168.1.100" # Your host IP

[[agents]]

[agents.agent_info]

host_ip = "192.168.1.100" # Same as server.ip

Next Steps

- Quick Start Guide - Complete deployment walkthrough

- Configuration Guide - Detailed configuration options

For production deployments, see Deployment Modes.

Basic Usage

This guide covers essential operations for using DeepTrace after completing the initial setup. It focuses on day-to-day operations and advanced usage patterns.

Prerequisites: Complete the Quick Start Guide before using this guide.

Core Workflow

DeepTrace follows a simple workflow for distributed tracing:

1. Agent Collection → 2. Span Correlation → 3. Trace Assembly → 4. Analysis

Advanced Agent Operations

Agent Status Management

# Check agent status

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent status

# Restart agent with new configuration

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent restart

# View agent logs

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent logs

Span Correlation

Available Algorithms

| Algorithm | Description | Use Case |

|---|---|---|

deeptrace | Advanced transaction-based correlation | Recommended for most scenarios |

fifo | Simple first-in-first-out correlation | Testing and simple applications |

Run Correlation

# Use DeepTrace algorithm (recommended)

sudo docker exec -it deeptrace_server python -m cli.src.cmd asso algo deeptrace

# Alternative: Use FIFO algorithm

sudo docker exec -it deeptrace_server python -m cli.src.cmd asso algo fifo

Trace Assembly

After correlation, assemble traces from correlated spans:

# Assemble traces

sudo docker exec -it deeptrace_server python -m cli.src.cmd assemble

Advanced Data Analysis

For basic trace viewing, see the Quick Start Guide. This section covers advanced analysis techniques.

Advanced Kibana Operations

# Create custom index patterns

# Set up advanced visualizations

# Configure dashboards for monitoring

For detailed analysis techniques, see Trace Analysis.

System Monitoring

Health Checks

# Check Elasticsearch cluster health

curl http://localhost:9200/_cluster/health

# Monitor container resource usage

sudo docker stats

# Verify DeepTrace containers

sudo docker ps | grep deeptrace

Data Management

# Clear all collected data

sudo docker exec -it deeptrace_server python -m cli.src.cmd db clear

# Delete specific Elasticsearch index

curl -X DELETE "localhost:9200/traces"

Troubleshooting

No Traces Collected

Common causes and solutions:

- Agent not running: Verify agent status and restart if needed

- No traffic: Ensure microservice applications are receiving requests

- Network issues: Check connectivity between agent and server

- Elasticsearch issues: Verify Elasticsearch is accessible and healthy

Poor Correlation Results

Optimization strategies:

- Try different algorithms: Switch between

deeptraceandfifo - Increase data collection: Ensure sufficient spans before correlation

- Check application traffic: Verify microservices are generating network activity

- Review configuration: Ensure proper agent and server configuration

Cleanup

Remove DeepTrace

To completely remove DeepTrace and all components:

sudo bash scripts/clear.sh

This will:

- Stop all containers

- Remove Docker images

- Clean up temporary files

- Reset the environment

Next Steps

- Web UI: Explore the web-based monitoring interface

- Database Setup: Advanced Elasticsearch configuration

- Workload Applications: Deploy additional test applications

- Configuration Guide: Advanced configuration options

Deployment Modes

DeepTrace supports different deployment modes to accommodate various use cases, from development and testing to production environments.

Available Deployment Modes

| Mode | Description | Use Case | Complexity |

|---|---|---|---|

| All-in-One | Single host deployment | Development, testing, learning | Simple |

| Distributed | Multi-host deployment | Production, large scale | Advanced |

All-in-One Mode

For complete all-in-one setup, see the All-in-One Deployment Guide and Quick Start Guide.

Key Characteristics

- Single host runs all components

- Simplified configuration and management

- Perfect for learning and development

- Limited scalability

Distributed Mode

Overview

Distributed deployment separates DeepTrace components across multiple hosts for production environments. This mode provides:

- Scalability: Handle large-scale distributed systems

- High Availability: Redundancy and fault tolerance

- Performance: Distributed processing and storage

- Flexibility: Independent scaling of components

Architecture

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ Server Host │ │ Agent Host 1 │ │ Agent Host 2 │

│ │ │ │ │ │

│ ┌─────────────┐ │ │ ┌─────────────┐ │ │ ┌─────────────┐ │

│ │ DeepTrace │ │ │ │ DeepTrace │ │ │ │ DeepTrace │ │

│ │ Server │◄┼────┼►│ Agent │ │ │ │ Agent │ │

│ └─────────────┘ │ │ └─────────────┘ │ │ └─────────────┘ │

│ ┌─────────────┐ │ │ ┌─────────────┐ │ │ ┌─────────────┐ │

│ │Elasticsearch│ │ │ │Microservices│ │ │ │Microservices│ │

│ │ Database │ │ │ │ Apps │ │ │ │ Apps │ │

│ └─────────────┘ │ │ └─────────────┘ │ │ └─────────────┘ │

└─────────────────┘ └─────────────────┘ └─────────────────┘

Configuration

For distributed deployment, configure multiple agents in server/config/config.toml:

[server]

ip = "192.168.1.100" # Server host IP

[[agents]]

[agents.agent_info]

agent_name = "agent-1"

host_ip = "192.168.1.101" # Different from server IP

user_name = "ubuntu"

ssh_port = 22

host_password = "password"

[[agents]]

[agents.agent_info]

agent_name = "agent-2"

host_ip = "192.168.1.102" # Another host

user_name = "ubuntu"

ssh_port = 22

host_password = "password"

Deployment Steps

- Configure server: Set up server configuration with multiple agents

- Deploy server: Run server on designated host

- Install agents: Deploy agents on target hosts

- Verify connectivity: Ensure all agents can communicate with server

- Start monitoring: Begin collecting traces across all hosts

Choosing the Right Mode

All-in-One Mode

Choose when:

- Learning DeepTrace functionality

- Developing and testing applications

- Demonstrating tracing capabilities

- Working with small-scale systems

Limitations:

- Limited to single host resources

- Not suitable for production

- No high availability

Distributed Mode

Choose when:

- Deploying to production

- Monitoring large-scale systems

- Requiring high availability

- Need independent scaling

Considerations:

- More complex setup and maintenance

- Requires network configuration

- Higher resource requirements

Next Steps

- All-in-One: Start with Quick Start Guide

- Distributed: Review Configuration Guide

- Basic Usage: Learn essential operations

- Database Setup: Configure Elasticsearch clusters

Distributed Mode

Distributed deployment separates DeepTrace components across multiple hosts for production environments. This mode provides scalability, high availability, and performance for large-scale distributed systems.

Architecture Overview

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ Server Host │ │ Agent Host 1 │ │ Agent Host 2 │

│ │ │ │ │ │

│ ┌─────────────┐ │ │ ┌─────────────┐ │ │ ┌─────────────┐ │

│ │ DeepTrace │ │ │ │ DeepTrace │ │ │ │ DeepTrace │ │

│ │ Server │◄┼────┼►│ Agent │ │ │ │ Agent │ │

│ └─────────────┘ │ │ └─────────────┘ │ │ └─────────────┘ │

│ ┌─────────────┐ │ │ ┌─────────────┐ │ │ ┌─────────────┐ │

│ │Elasticsearch│ │ │ │Microservices│ │ │ │Microservices│ │

│ │ Database │ │ │ │ Apps │ │ │ │ Apps │ │

│ └─────────────┘ │ │ └─────────────┘ │ │ └─────────────┘ │

└─────────────────┘ └─────────────────┘ └─────────────────┘

Key Benefits

- Scalability: Handle large-scale distributed systems

- High Availability: Redundancy and fault tolerance

- Performance: Distributed processing and storage

- Flexibility: Independent scaling of components

Configuration

Configure multiple agents in server/config/config.toml:

[server]

ip = "192.168.1.100" # Server host IP

[[agents]]

[agents.agent_info]

agent_name = "agent-1"

host_ip = "192.168.1.101" # Different from server IP

user_name = "ubuntu"

ssh_port = 22

host_password = "password"

[[agents]]

[agents.agent_info]

agent_name = "agent-2"

host_ip = "192.168.1.102" # Another host

user_name = "ubuntu"

ssh_port = 22

host_password = "password"

Deployment Steps

- Configure server: Set up server configuration with multiple agents

- Deploy server: Run server on designated host

- Install agents: Deploy agents on target hosts

- Verify connectivity: Ensure all agents can communicate with server

- Start monitoring: Begin collecting traces across all hosts

Requirements

- Multiple hosts: At least 2 hosts (1 server + 1+ agents)

- Network connectivity: All hosts must communicate

- SSH access: Server needs SSH access to agent hosts

- Resources: Varies by scale and workload

Use Cases

Choose distributed mode when:

- Deploying to production

- Monitoring large-scale systems

- Requiring high availability

- Need independent scaling

Considerations

- More complex setup and maintenance

- Requires network configuration

- Higher resource requirements

- Need proper security configuration

Next Steps

- Configuration Guide: Detailed configuration options

- Basic Usage: Learn essential operations

- Database Management: Configure Elasticsearch clusters

Single Host Mode

Single host mode (also known as All-in-One mode) runs both the DeepTrace server and agent on a single host. This is the recommended starting point for new users and ideal for development, testing, and learning.

Architecture Overview

┌─────────────────────────────────────────────────┐

│ Single Host (All-in-One) │

│ │

│ ┌──────────────┐ ┌──────────────┐ │

│ │ DeepTrace │◄────►│ Elasticsearch│ │

│ │ Server │ │ Database │ │

│ └──────────────┘ └──────────────┘ │

│ ▲ │

│ │ │

│ ▼ │

│ ┌──────────────┐ ┌──────────────┐ │

│ │ DeepTrace │◄────►│ Microservice │ │

│ │ Agent │ │ Apps │ │

│ └──────────────┘ └──────────────┘ │

│ │

└─────────────────────────────────────────────────┘

Key Benefits

- Simple Setup: Single command deployment

- Quick Learning: Understand all components on one host

- Easy Testing: Perfect for evaluation and development

- Minimal Resources: Requires only one host

- Fast Iteration: Quick development and testing cycles

Configuration

In single host mode, the server and agent IPs must be identical:

[server]

ip = "192.168.1.100" # Your host IP

[[agents]]

[agents.agent_info]

agent_name = "local-agent"

host_ip = "192.168.1.100" # Same as server.ip

user_name = "ubuntu"

ssh_port = 22

host_password = "password"

💡 Important:

server.ipandagents.agent_info.host_ipmust be identical.

Quick Start

For complete single host deployment, follow the Quick Start Guide:

- Clone repository and configure settings

- Deploy server (DeepTrace + Elasticsearch)

- Deploy sample app (BookInfo or Social Network)

- Install agent (compiles and starts monitoring)

- Generate traffic and build traces

- View results in Kibana dashboard

Requirements

- OS: Ubuntu 24.04 LTS

- Memory: 8GB RAM minimum

- Storage: 40GB free space

- Docker: v26.1.3+

- Network: Internet connectivity

Use Cases

Choose single host mode when:

- Learning DeepTrace functionality

- Developing and testing applications

- Demonstrating tracing capabilities

- Working with small-scale systems

- Prototyping and proof-of-concept work

Limitations

- Limited to single host resources

- Not suitable for production at scale

- No high availability

- Cannot distribute load across multiple hosts

- Limited by single host performance

Comparison with Distributed Mode

| Aspect | Single Host | Distributed |

|---|---|---|

| Complexity | Simple, single host | Complex, multiple hosts |

| Use Case | Testing, development | Production, large scale |

| Resources | 8GB RAM, 40GB disk | Varies by scale |

| Scalability | Limited to one host | Highly scalable |

| Maintenance | Easy | Requires orchestration |

| Setup Time | 10 minutes | Hours to days |

Troubleshooting

Common Issues

- Port conflicts: Ensure ports 5601, 9200, and application ports are available

- Resource constraints: Monitor memory and disk usage

- Docker issues: Verify Docker is running and has sufficient resources

- Network connectivity: Check that services can communicate

Performance Optimization

# Monitor resource usage

sudo docker stats

# Check available disk space

df -h

# Monitor memory usage

free -h

# Check system load

top

Migration to Distributed

When ready to move to production, you can migrate to distributed mode:

- Export configuration: Save current settings

- Plan architecture: Design multi-host deployment

- Configure distributed setup: Update configuration files

- Deploy incrementally: Start with server, then add agents

- Validate functionality: Ensure all components work correctly

Next Steps

- Quick Start Guide: Complete deployment walkthrough

- Distributed Mode: Learn about production deployment

- Basic Usage: Explore DeepTrace operations

- Workload Applications: Deploy test applications

Workload Applications

DeepTrace includes test microservice applications for demonstrating distributed tracing capabilities. These applications generate realistic network traffic patterns that help you understand how DeepTrace collects and correlates traces.

Available Workloads

| Application | Complexity | Services | Use Case |

|---|---|---|---|

| BookInfo | Simple | 4 services | Learning, basic testing |

| Social Network | Complex | 15+ services | Advanced testing, performance evaluation |

BookInfo Application

Overview

BookInfo is a simple microservices application that displays information about books. It consists of four services:

- Product Page: Frontend service that displays book information

- Details Service: Provides book details (author, ISBN, etc.)

- Reviews Service: Manages book reviews

- Ratings Service: Provides book ratings

Quick Deployment

# Navigate to BookInfo directory

cd tests/workload/bookinfo

# Deploy all services

sudo bash deploy.sh

Generate Traffic

# Generate test traffic to create traces

sudo bash client.sh

The client script will:

- Send HTTP requests to the product page

- Trigger inter-service communication

- Generate network traffic for DeepTrace to capture

Cleanup

# Stop and remove all services

sudo bash clear.sh

Social Network Application

Overview

Social Network is a complex microservices application that implements a Twitter-like social media platform. It includes services for:

- User management and authentication

- Timeline and post management

- Media handling and storage

- Social graph and recommendations

- Notification systems

Quick Deployment

# Navigate to Social Network directory

cd tests/workload/socialnetwork

# Deploy the full application stack

bash deploy.sh

Generate Traffic

# Generate realistic social media traffic

bash client.sh

The client generates:

- User registration and login requests

- Post creation and timeline updates

- Social interactions (likes, follows)

- Media uploads and downloads

Cleanup

# Stop and remove all services

bash clear.sh

Integration with DeepTrace

Workflow with Workloads

- Deploy DeepTrace: Follow the Quick Start Guide

- Deploy workload: Choose BookInfo or Social Network

- Start agent: Begin collecting traces

- Generate traffic: Run client scripts to create network activity

- Process traces: Run correlation and assembly

- Analyze results: View traces in Kibana

Example Complete Workflow

# 1. Deploy workload application

cd tests/workload/bookinfo

sudo bash deploy.sh

# 2. Start DeepTrace agent

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent run

# 3. Generate traffic

sudo bash client.sh

# 4. Process traces

sudo docker exec -it deeptrace_server python -m cli.src.cmd asso algo deeptrace

sudo docker exec -it deeptrace_server python -m cli.src.cmd assemble

# 5. View results in Kibana at http://YOUR_SERVER_IP:5601

Additional Resources

For detailed deployment instructions and architecture information:

- BookInfo: BookInfo README

- Social Network: Social Network README

- Basic Usage: Basic Usage Guide for trace collection and analysis

BookInfo Application

BookInfo is a simple microservices application that displays information about books. It's perfect for learning distributed tracing concepts and testing DeepTrace functionality.

Application Architecture

BookInfo consists of four microservices:

┌─────────────────┐ ┌─────────────────┐

│ Product Page │◄──►│ Details Service│

│ (Frontend) │ │ │

└─────────┬───────┘ └─────────────────┘

│

▼

┌─────────────────┐ ┌─────────────────┐

│ Reviews Service │◄──►│ Ratings Service │

│ │ │ │

└─────────────────┘ └─────────────────┘

Services Overview

| Service | Description | Technology | Port |

|---|---|---|---|

| Product Page | Frontend service that displays book information | Python | 9080 |

| Details Service | Provides book details (author, ISBN, etc.) | Ruby | 9080 |

| Reviews Service | Manages book reviews | Java | 9080 |

| Ratings Service | Provides book ratings | Node.js | 9080 |

Prerequisites

- Docker and Docker Compose installed

- DeepTrace server running

- Ports 9080 available

Quick Deployment

1. Deploy BookInfo Services

Navigate to the BookInfo directory and deploy all services:

cd tests/workload/bookinfo

sudo bash deploy.sh

The deployment script will:

- Install Docker and Docker Compose (if needed)

- Pull required Docker images

- Launch all services using Docker Compose

- Set up service networking

2. Verify Deployment

Check that all services are running:

sudo docker ps | grep bookinfo

You should see containers for:

bookinfo-productpagebookinfo-detailsbookinfo-reviewsbookinfo-ratings

3. Access the Application

Open your browser and visit:

http://localhost:9080/productpage

You should see the BookInfo product page displaying book information.

Generate Traffic for Tracing

Automated Traffic Generation

Use the provided client script to generate test traffic:

sudo bash client.sh

This script will:

- Start an interactive shell inside the client container

- Issue frontend requests against the BookInfo application

- Generate HTTP traffic between microservices

- Create network traces for DeepTrace to capture

Manual Traffic Generation

You can also generate traffic manually:

# Generate multiple requests

for i in {1..100}; do

curl -s http://localhost:9080/productpage > /dev/null

echo "Request $i completed"

sleep 1

done

Traffic Patterns

The BookInfo application generates the following traffic patterns:

- Frontend Requests: User requests to product page

- Service-to-Service Calls:

- Product Page → Details Service

- Product Page → Reviews Service

- Reviews Service → Ratings Service

- Database Queries: Internal service data access

Integration with DeepTrace

Complete Workflow

-

Deploy BookInfo:

cd tests/workload/bookinfo sudo bash deploy.sh -

Start DeepTrace Agent:

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent run -

Generate Traffic:

sudo bash client.sh -

Process Traces:

sudo docker exec -it deeptrace_server python -m cli.src.cmd asso algo deeptrace sudo docker exec -it deeptrace_server python -m cli.src.cmd assemble -

View Results: Access Kibana at

http://YOUR_SERVER_IP:5601

Expected Trace Data

When properly configured, you should see traces showing:

- HTTP requests between services

- Service response times

- Request flow through the microservice architecture

- Network connection details

Troubleshooting

Services Not Starting

# Check container logs

sudo docker logs bookinfo-productpage

sudo docker logs bookinfo-details

sudo docker logs bookinfo-reviews

sudo docker logs bookinfo-ratings

# Restart services

sudo docker-compose restart

No Network Traffic Captured

- Ensure DeepTrace agent is running

- Verify services are generating traffic

- Check that containers are on the same network

- Confirm eBPF programs are loaded

Port Conflicts

If port 9080 is already in use:

# Check what's using the port

sudo netstat -tulpn | grep 9080

# Stop conflicting services or modify docker-compose.yaml

Cleanup

Stop BookInfo Services

sudo bash clear.sh

This will:

- Stop all BookInfo containers

- Remove containers and networks

- Clean up Docker resources

Complete Cleanup

# Remove all BookInfo images

sudo docker rmi $(sudo docker images | grep bookinfo | awk '{print $3}')

# Remove unused networks

sudo docker network prune -f

Advanced Configuration

Custom Configuration

You can modify the docker-compose.yaml file to:

- Change service ports

- Add environment variables

- Configure resource limits

- Enable additional logging

Performance Testing

For performance testing with BookInfo:

# Install Apache Bench

sudo apt-get install apache2-utils

# Run load test

ab -n 1000 -c 10 http://localhost:9080/productpage

Next Steps

- Social Network Application: Try a more complex microservices application

- Trace Analysis: Learn how to analyze the collected traces

- Basic Usage: Explore advanced DeepTrace operations

Social Network Application

Social Network is a complex microservices application that implements a Twitter-like social media platform. It's ideal for testing DeepTrace with realistic, large-scale distributed systems.

Application Architecture

The Social Network application consists of 15+ microservices communicating via Thrift RPCs:

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ Nginx Web │◄──►│ User Service │◄──►│ User Timeline │

│ Server │ │ │ │ Service │

└─────────┬───────┘ └─────────────────┘ └─────────────────┘

│

▼

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ Compose Post │◄──►│ Post Storage │◄──►│ Home Timeline │

│ Service │ │ Service │ │ Service │

└─────────────────┘ └─────────────────┘ └─────────────────┘

│

▼

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ Social Graph │◄──►│ URL Shortener │◄──►│ Media Service │

│ Service │ │ Service │ │ │

└─────────────────┘ └─────────────────┘ └─────────────────┘

Core Services

| Service | Description | Technology | Port |

|---|---|---|---|

| Nginx Web Server | Frontend proxy and web interface | Nginx | 8080 |

| User Service | User management and authentication | C++ | 9090 |

| Compose Post Service | Post creation and processing | C++ | 9090 |

| Post Storage Service | Post data persistence | C++ | 9090 |

| User Timeline Service | Individual user timelines | C++ | 9090 |

| Home Timeline Service | Aggregated home timelines | C++ | 9090 |

| Social Graph Service | Follow relationships | C++ | 9090 |

| URL Shortener Service | URL shortening functionality | C++ | 9090 |

| Media Service | Image and video handling | C++ | 9090 |

| Text Service | Text processing and filtering | C++ | 9090 |

| Unique ID Service | Unique identifier generation | C++ | 9090 |

Supporting Infrastructure

| Component | Description | Port |

|---|---|---|

| MongoDB | Primary database | 27017 |

| Redis | Caching layer | 6379 |

| Memcached | Additional caching | 11211 |

| Jaeger | Distributed tracing (optional) | 16686 |

Prerequisites

- Docker and Docker Compose installed

- DeepTrace server running

- At least 8GB RAM available

- Ports 8080, 8081, 16686 available

- Python 3.5+ with asyncio and aiohttp

- Build dependencies: libssl-dev, libz-dev, luarocks, luasocket

Quick Deployment

1. Deploy Social Network Services

Navigate to the Social Network directory and deploy:

cd tests/workload/socialnetwork

sudo bash deploy.sh

The deployment script will:

- Install required dependencies

- Build Docker images

- Start all microservices

- Initialize databases

- Set up networking

2. Verify Deployment

Check that all services are running:

sudo docker ps | grep social

You should see containers for all microservices, databases, and supporting infrastructure.

3. Access the Application

Web Interface

Open your browser and visit:

http://localhost:8080

Media Frontend

Access the media interface at:

http://localhost:8081

Jaeger Tracing (if enabled)

View built-in traces at:

http://localhost:16686

Initialize Social Graph

Before generating traffic, initialize the social graph with users and relationships:

# Initialize with small Reed98 Facebook network

python3 scripts/init_social_graph.py --graph=socfb-Reed98

# Initialize with medium Ego Twitter network

python3 scripts/init_social_graph.py --graph=ego-twitter

# Initialize with large Twitter follows network

python3 scripts/init_social_graph.py --graph=soc-twitter-follows-mun

For remote deployments, specify IP and port:

python3 scripts/init_social_graph.py --graph=socfb-Reed98 --ip=YOUR_SERVER_IP --port=8080

Generate Traffic for Tracing

Automated Traffic Generation

Use the provided client script:

sudo bash client.sh

Manual Workload Generation

The Social Network application supports various workload patterns:

1. Compose Posts

../wrk2/wrk -D exp -t 12 -c 400 -d 300 -L \

-s ./wrk2/scripts/social-network/compose-post.lua \

http://localhost:8080/wrk2-api/post/compose -R 10

2. Read Home Timelines

../wrk2/wrk -D exp -t 12 -c 400 -d 300 -L \

-s ./wrk2/scripts/social-network/read-home-timeline.lua \

http://localhost:8080/wrk2-api/home-timeline/read -R 10

3. Read User Timelines

../wrk2/wrk -D exp -t 12 -c 400 -d 300 -L \

-s ./wrk2/scripts/social-network/read-user-timeline.lua \

http://localhost:8080/wrk2-api/user-timeline/read -R 10

Traffic Patterns

The Social Network application generates complex traffic patterns:

- User Authentication: Login/logout requests

- Post Operations: Create, read, update posts

- Timeline Operations: Home and user timeline requests

- Social Operations: Follow/unfollow, recommendations

- Media Operations: Image/video upload and retrieval

- Database Operations: MongoDB and Redis queries

- Cache Operations: Memcached read/write operations

Integration with DeepTrace

Complete Workflow

-

Deploy Social Network:

cd tests/workload/socialnetwork sudo bash deploy.sh -

Initialize Social Graph:

python3 scripts/init_social_graph.py --graph=socfb-Reed98 -

Start DeepTrace Agent:

sudo docker exec -it deeptrace_server python -m cli.src.cmd agent run -

Generate Traffic:

sudo bash client.sh -

Process Traces:

sudo docker exec -it deeptrace_server python -m cli.src.cmd asso algo deeptrace sudo docker exec -it deeptrace_server python -m cli.src.cmd assemble -

View Results: Access Kibana at

http://YOUR_SERVER_IP:5601

Expected Trace Data

With Social Network, you should see rich trace data including:

- Complex service-to-service communication patterns

- Database query traces (MongoDB, Redis, Memcached)

- HTTP and Thrift RPC calls

- Media upload/download operations

- Authentication and authorization flows

- Caching layer interactions

Advanced Features

Enable TLS

For TLS-enabled deployment:

sudo docker-compose -f docker-compose-tls.yml up -d

Enable Redis Sharding

For cache and database sharding:

sudo docker-compose -f docker-compose-sharding.yml up -d

Docker Swarm Deployment

For multi-node deployment:

docker stack deploy --compose-file=docker-compose-swarm.yml social-network

Performance Testing

Load Testing with wrk2

First, build the workload generator:

cd ../wrk2

make

cd ../socialNetwork

Then run various load tests:

# High-throughput compose posts

../wrk2/wrk -D exp -t 20 -c 800 -d 600 -L \

-s ./wrk2/scripts/social-network/compose-post.lua \

http://localhost:8080/wrk2-api/post/compose -R 100

# Mixed workload

../wrk2/wrk -D exp -t 16 -c 600 -d 300 -L \

-s ./wrk2/scripts/social-network/mixed-workload.lua \

http://localhost:8080 -R 50

Troubleshooting

Services Not Starting

# Check individual service logs

sudo docker logs social-network-nginx-thrift

sudo docker logs social-network-user-service

sudo docker logs social-network-compose-post-service

# Check database connectivity

sudo docker logs social-network-mongodb

sudo docker logs social-network-redis

Database Connection Issues

# Restart databases

sudo docker restart social-network-mongodb social-network-redis

# Check database status

sudo docker exec social-network-mongodb mongo --eval "db.stats()"

sudo docker exec social-network-redis redis-cli ping

Memory Issues

The Social Network application is resource-intensive:

# Monitor resource usage

sudo docker stats

# Increase Docker memory limits if needed

# Edit docker-compose.yml and add memory limits

No Network Traffic Captured

- Ensure all services are fully started (can take 2-3 minutes)

- Verify social graph initialization completed

- Check that DeepTrace agent is monitoring the correct containers

- Confirm eBPF programs are loaded for all relevant processes

Cleanup

Stop Social Network Services

sudo bash clear.sh

Complete Cleanup

# Remove all Social Network images

sudo docker rmi $(sudo docker images | grep social | awk '{print $3}')

# Clean up volumes

sudo docker volume prune -f

# Remove networks

sudo docker network prune -f

Monitoring and Observability

Built-in Jaeger Tracing

The Social Network application includes Jaeger tracing:

# Access Jaeger UI

http://localhost:16686

Custom Metrics

Monitor application metrics:

# Service health endpoints

curl http://localhost:8080/health

curl http://localhost:8081/health

# Database metrics

sudo docker exec social-network-mongodb mongo --eval "db.serverStatus()"

sudo docker exec social-network-redis redis-cli info

Development and Customization

Modifying Services

The Social Network application is actively developed. You can:

- Modify service configurations in

config/ - Customize workload scripts in

wrk2/scripts/ - Adjust Docker Compose configurations

- Add custom monitoring and logging

Building from Source

# Build custom images

sudo docker-compose build

# Build specific services

sudo docker-compose build user-service

sudo docker-compose build compose-post-service

Next Steps

- BookInfo Application: Try a simpler microservices application

- Trace Analysis: Learn advanced trace analysis techniques

- Basic Usage: Explore DeepTrace operations

- Database Management: Configure advanced Elasticsearch setups

Trace Analysis

This section covers advanced trace analysis techniques and tools for understanding distributed system behavior through DeepTrace data.

Overview

Trace analysis helps you:

- Identify performance bottlenecks in distributed systems

- Understand service dependencies and communication patterns

- Debug complex issues across multiple services

- Monitor system health and reliability

Analysis Tools

Kibana Dashboard

Access the primary analysis interface through Kibana:

URL: http://YOUR_SERVER_IP:5601

Username: elastic

Password: YOUR_ELASTIC_PASSWORD

Key Analysis Features

| Feature | Description | Use Case |

|---|---|---|

| Discover | Search and filter traces | Find specific requests or errors |

| Visualize | Create charts and graphs | Monitor trends and patterns |

| Dashboard | Combine multiple visualizations | System overview and monitoring |

Trace Data Structure

Span Information

Each span contains:

- Trace ID: Links spans belonging to the same request

- Span ID: Unique identifier for each span

- Parent ID: Creates the trace hierarchy

- Service Name: Identifies the source service

- Operation: Specific function or endpoint

- Duration: Time taken for the operation

- Tags: Additional metadata and labels

Correlation Data

DeepTrace provides correlation information:

- Network connections: TCP/UDP connection details

- Process information: PID, container ID, host details

- Timing data: Precise timestamps and latencies

- Protocol data: HTTP, database, and other protocol specifics

Analysis Techniques

Performance Analysis

Identify slow requests:

- Sort traces by duration

- Examine longest-running spans

- Analyze service-to-service latencies

- Look for patterns in slow operations

Example Kibana query:

duration:>1000 AND service.name:"product-page"

Error Analysis

Find failed requests:

- Filter by error status codes

- Examine error messages and stack traces

- Correlate errors across services

- Identify error propagation patterns

Example Kibana query:

tags.http.status_code:>=400 OR tags.error:true

Dependency Analysis

Understand service relationships:

- Map service-to-service communications

- Identify critical path dependencies

- Analyze communication patterns

- Detect circular dependencies

Traffic Pattern Analysis

Monitor system behavior:

- Analyze request volume over time

- Identify peak usage periods

- Monitor service load distribution

- Detect unusual traffic patterns

Common Analysis Scenarios

Debugging Slow Requests

-

Find the slow trace:

- Sort by duration in Kibana

- Identify traces exceeding SLA thresholds

-

Analyze the trace structure:

- Examine span hierarchy

- Identify the slowest spans

- Check for blocking operations

-

Investigate root causes:

- Database query performance

- Network latency issues

- Resource contention

- External service delays

Service Health Monitoring

-

Error rate monitoring:

- Track error percentages by service

- Set up alerts for threshold breaches

- Monitor error trends over time

-

Latency monitoring:

- Track response time percentiles

- Monitor SLA compliance

- Identify performance degradation

-

Throughput analysis:

- Monitor request volume

- Analyze capacity utilization

- Plan for scaling needs

Capacity Planning

-

Resource utilization:

- Analyze service load patterns

- Identify bottleneck services

- Monitor growth trends

-

Scaling decisions:

- Determine which services need scaling

- Understand traffic distribution

- Plan infrastructure changes

Best Practices

Effective Querying

- Use specific time ranges to improve query performance

- Combine multiple filters for precise results

- Save useful queries for repeated analysis

- Use wildcards carefully to avoid performance issues

Dashboard Creation

- Group related metrics on the same dashboard

- Use appropriate visualization types for different data

- Set up refresh intervals for real-time monitoring

- Share dashboards with team members

Alert Configuration

- Set meaningful thresholds based on SLA requirements

- Avoid alert fatigue with appropriate sensitivity

- Include context in alert messages

- Test alert conditions before deployment

Advanced Analysis

Custom Visualizations

Create specialized charts for:

- Service dependency graphs

- Request flow diagrams

- Performance heat maps

- Error correlation matrices

Data Export

Export trace data for:

- External analysis tools

- Long-term storage

- Compliance reporting

- Machine learning analysis

Integration with Other Tools

Connect DeepTrace data with:

- APM tools for enhanced monitoring

- Log aggregation systems

- Metrics collection platforms

- Incident management systems

Next Steps

- Web UI: Explore the web-based monitoring interface

- Database Setup: Advanced Elasticsearch configuration

- Basic Usage: Learn essential DeepTrace operations

Web Interface

The DeepTrace web interface provides an intuitive user experience, enabling users to interact with the system effortlessly. Through the collaboration of the frontend, backend, and database, the system efficiently processes data and delivers real-time feedback.

UI Architecture

graph TD

UI_Frontend -->|Calls API| UI_Backend

UI_Backend -->|Fetches data| ElasticDB[Elastic Database]

UI_Frontend -->|Displays data| User[End User]

Component Descriptions

-

UI Frontend

TheUI_Frontendis the user-facing component of the system. It is responsible for rendering the interface and interacting with the user. The frontend communicates with the backend via APIs to fetch and display data.- Code Repository: DeepTrace Frontend Repository

-

UI Backend

TheUI_Backendacts as the intermediary between the frontend and the database. It provides APIs for the frontend to call and handles data processing, business logic, and communication with the database.- Code Repository: DeepTrace Backend Repository

-

Elastic Database

TheElasticDBis the data storage component of the system. It stores all the necessary data and allows the backend to query and retrieve information as needed. It is optimized for search and analytics, making it suitable for handling large datasets efficiently.

Deployment Instructions

Backend

To deploy the backend, follow these steps:

-

Clone the Repository

Clone the backend code repository using the following command:git clone https://github.com/DeepShield-AI/DeepTrace-server.git cd DeepTrace-server -

Modify Configuration File

Update the following properties in the configuration file located at application.properties:spring.elasticsearch.uris=http://xxx spring.elasticsearch.username=xxx spring.elasticsearch.password=xxx -

Build the Project

Run the following commands to build the backend:chmod +x mvnw sudo docker run --privileged --rm -it -v $(pwd):/app docker.1ms.run/maven:3.9.6-eclipse-temurin-17 bash -c "cd /app; ./mvnw clean package" -

Run the Application

Start the backend application using the following command:java -jar ./start/target/start-0.0.1-SNAPSHOT.jar

Frontend

To deploy the frontend, follow these steps:

-

Clone the Repository

Clone the frontend code repository using the following command:git clone https://gitee.com/qcl_CSTP/deeptrace-platform-side.git cd deeptrace-platform-side -

Modify Configuration File

Update the necessary configuration settings. TODO: Add specific configuration details here. -

Install Dependencies

Ensure that Node.js and npm are installed. If not, install them using the following commands:sudo apt update sudo apt install -y nodejs npmThen, install the project dependencies:

npm install -

Run the Application

Start the frontend application using the following command:npm start

UI Functionality Description

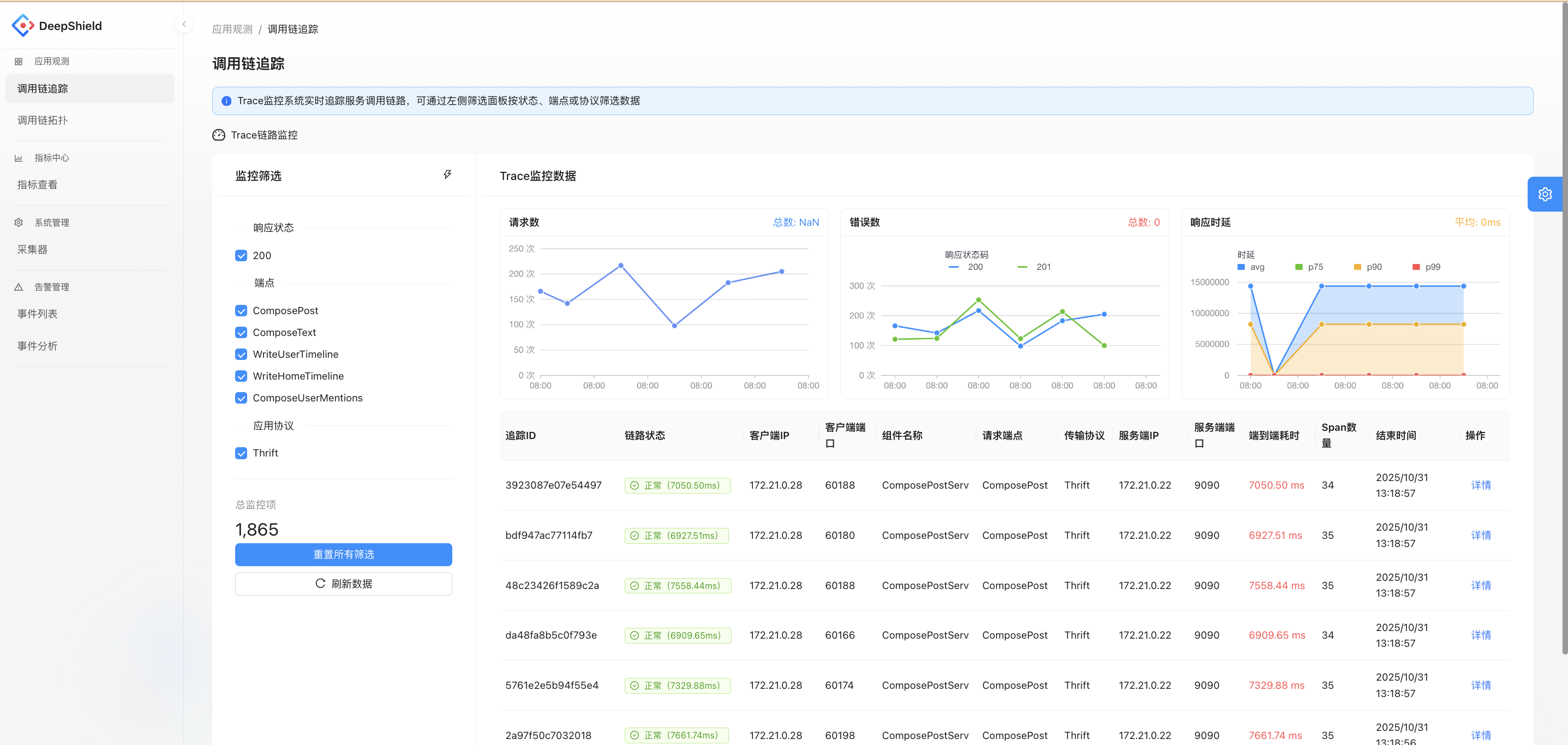

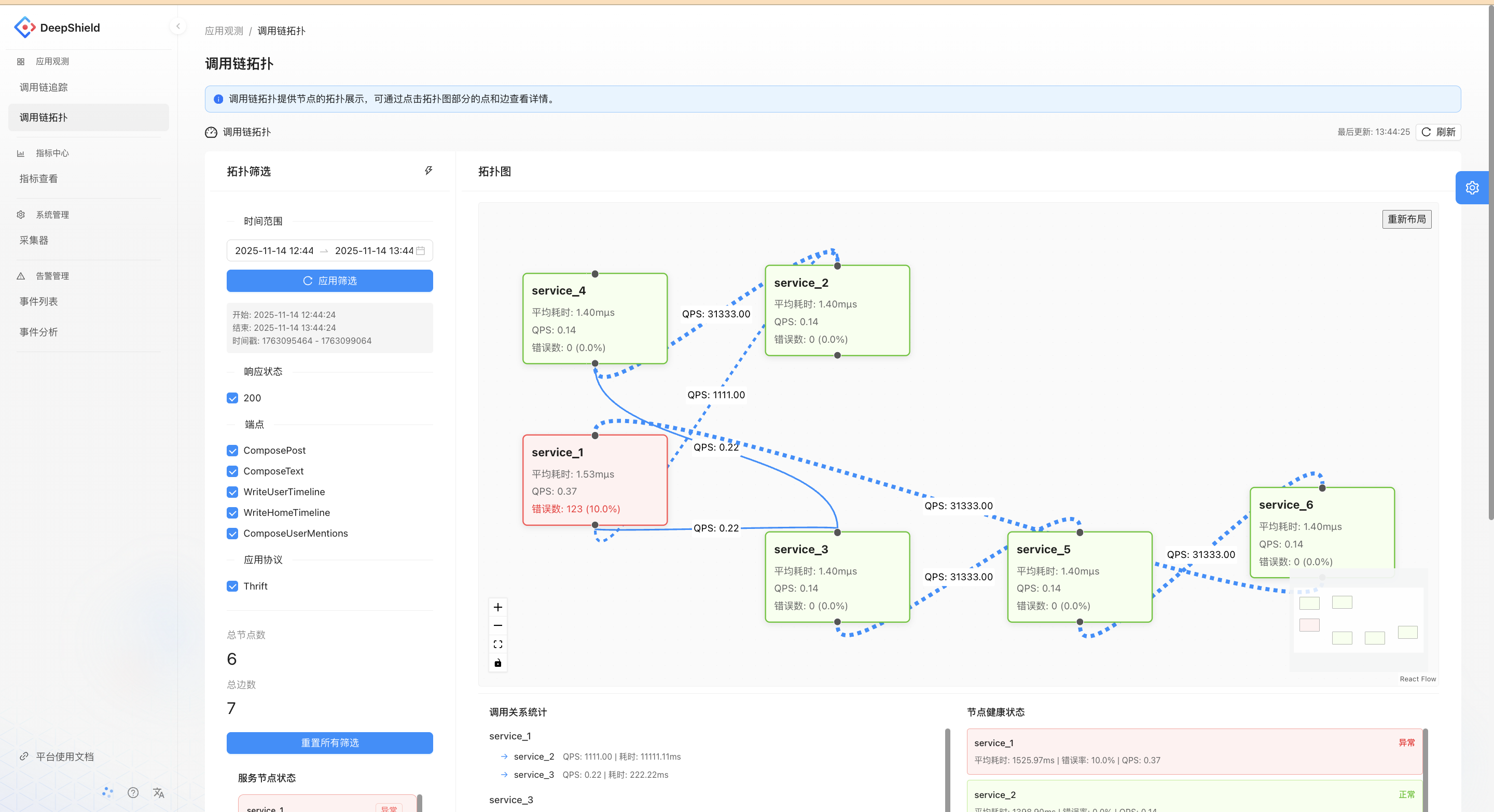

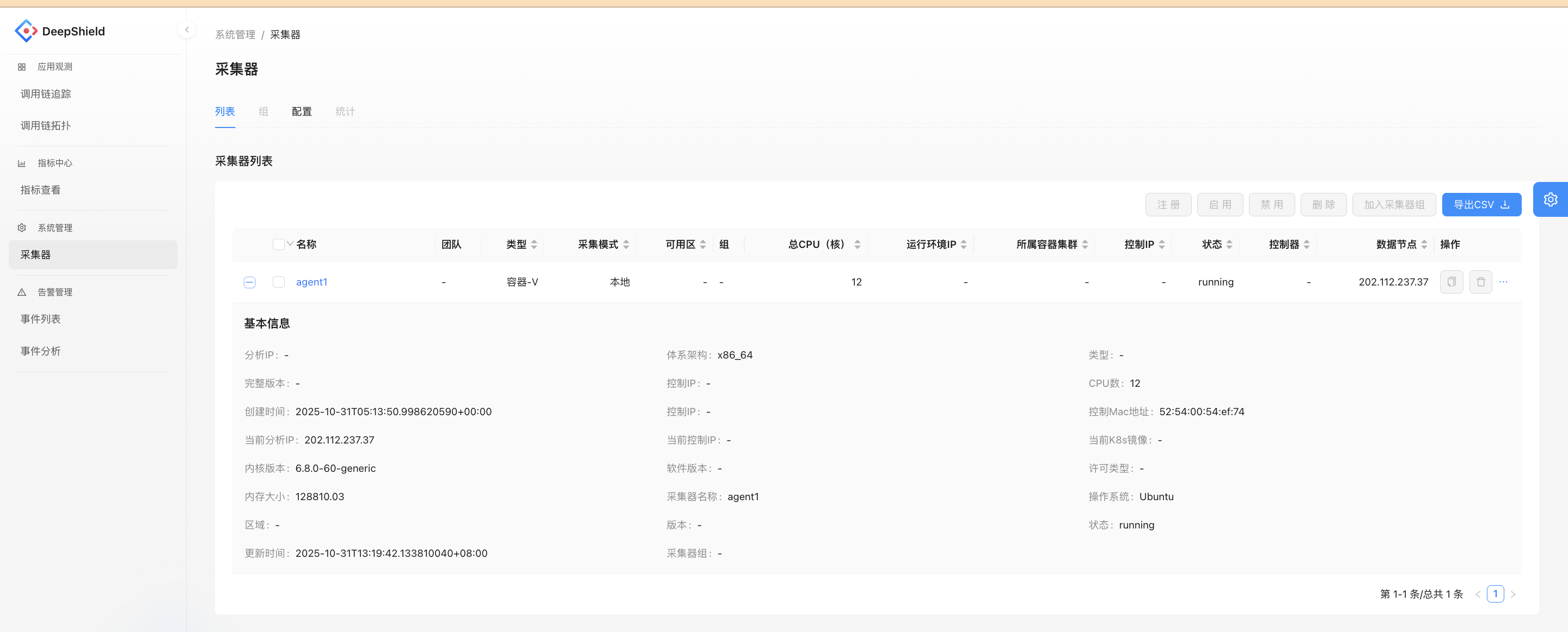

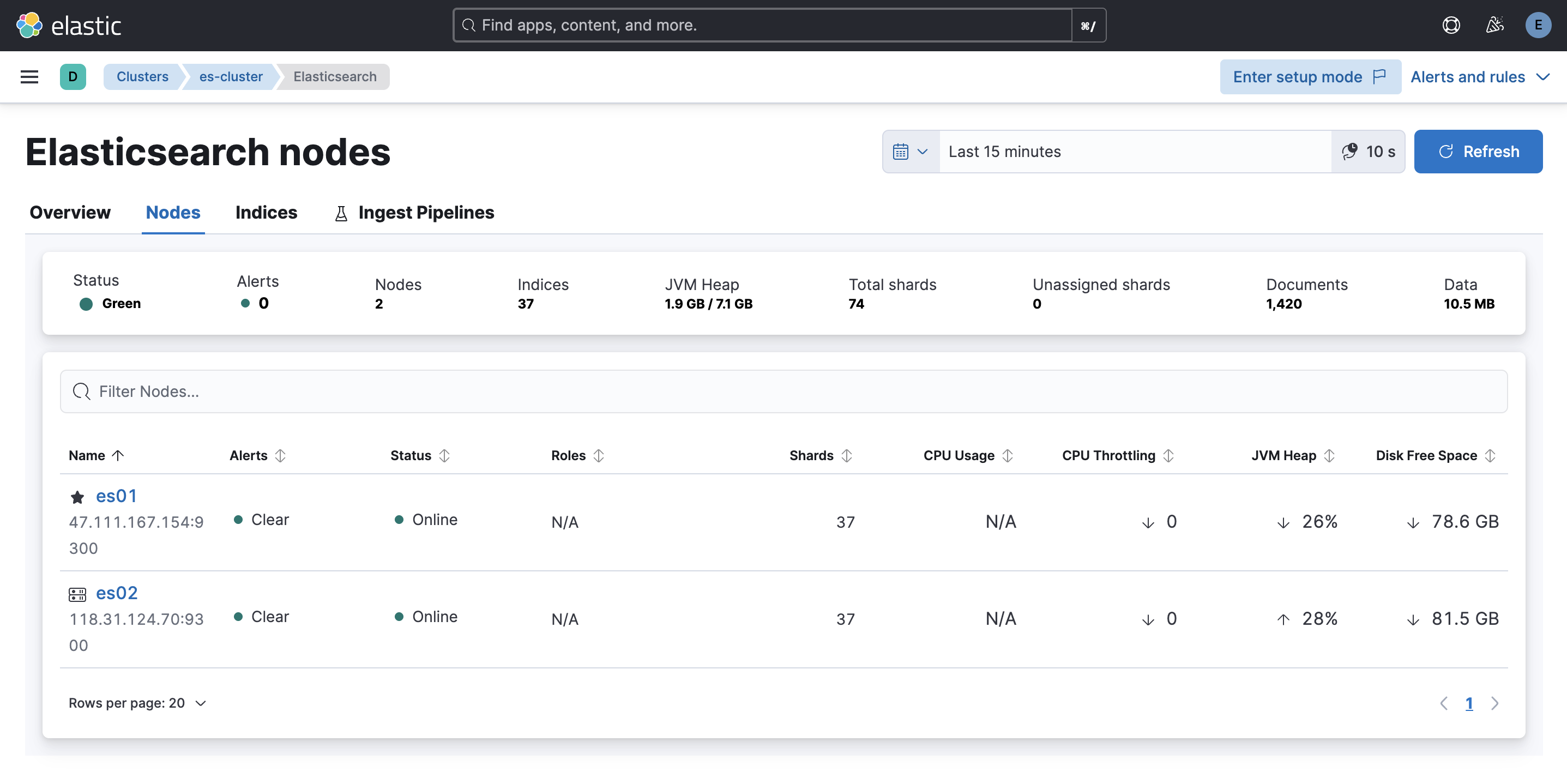

Trace Chain Tracking Module